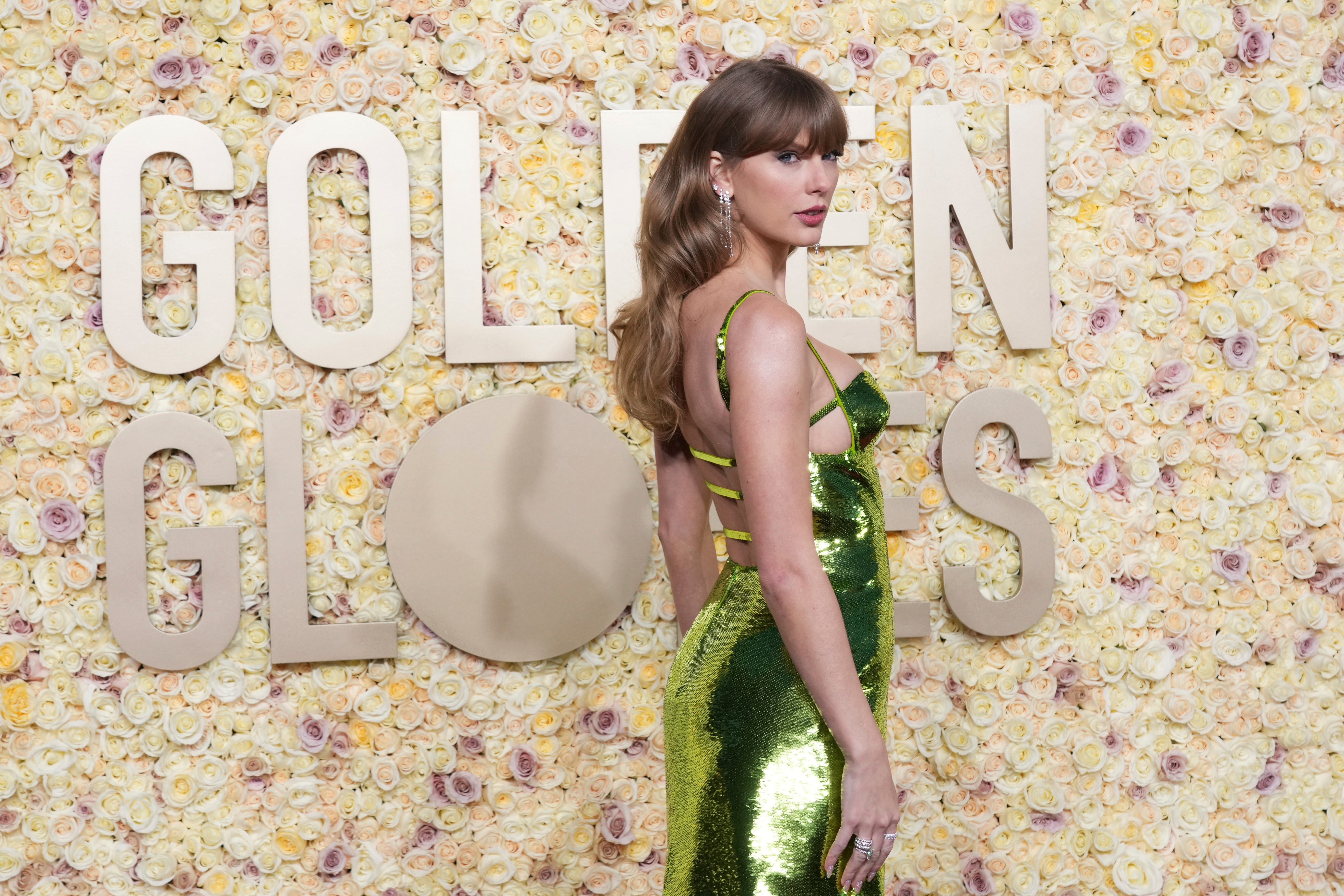

The Taylor Swift deepfakes show why we urgently need AI regulation

We must take stronger measures to prevent the circulation of this material, which enables the abuse of women and girls, writes Labour MP Sarah Owen. After all, if the world’s most famous singer isn’t safe from AI, then how vulnerable are the rest of us?

Hopefully, you haven’t seen the images. But you wouldn’t have to look very hard to find them. Many users of X (formerly Twitter), Reddit and other mainstream social media platforms have stumbled across them without meaning to. X claims to be rooting them out, but it is fighting a losing battle against its own technology. Taylor Swift’s friends and family are reportedly “furious”, and rightly so. She is said to be considering legal action.

This raises two questions, both for Taylor and for any other victim of deepfake abuse: how on earth were these pictures allowed to be made and shared in the first place, and what legal recourse do victims have?

The truth is that current legislation does little to stop the production of deepfakes or to protect victims. There are no specific bans on the production of many deepfakes, and rules attempting to stop them being shared are extremely difficult to enforce.